Beyond Sonification

1. Introduction

The sonification of biosignals (converting data into sound) is not a new concept, and sonification has been used for a wide variety of applications. Beyond performance art, there are many medical applications, such as sonifying physiological processes for diagnosis and for anesthesiological, intensive-care, or exercise monitoring, to name just a few (1). Most of these sonifications would more aptly be called audifications, since the data is translated directly into equivalent frequencies. In my research, sonification appears to be associated more with a creative approach: turning a data set into something more musical. Music made specifically with an EEG has largely been simple sonification, in which the brain waves are scaled and assigned to frequencies of tone generators. In my project, I have been able not only to interpret and sonify emotion but also to create real-time cognitive control over audiovisual composition, using a consumer EEG from the company Emotiv and the graphical programming language Max/MSP/Jitter.
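To make the distinction concrete, here is a minimal Python sketch of the simple sonification described above. A series of EEG values is linearly rescaled into a pitch range, and each value is rendered as a short sine tone. The 220-880 Hz range, the 8 kHz sample rate, the 0.1 s tone length, and the function names are illustrative assumptions; this is not the Max/MSP/Jitter patch used in this project.

```python
import math

def scale_to_frequency(values, f_min=220.0, f_max=880.0):
    """Linearly map each value onto [f_min, f_max] Hz (assumed pitch range)."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A constant signal maps to the middle of the range.
        return [(f_min + f_max) / 2.0 for _ in values]
    return [f_min + (v - lo) / span * (f_max - f_min) for v in values]

def render_tones(freqs, sample_rate=8000, tone_seconds=0.1):
    """Concatenate one sine tone per frequency; samples lie in [-1, 1]."""
    n = int(sample_rate * tone_seconds)
    samples = []
    phase = 0.0  # carried across tones so the waveform stays continuous
    for f in freqs:
        step = 2.0 * math.pi * f / sample_rate
        for _ in range(n):
            samples.append(math.sin(phase))
            phase += step
    return samples

# Usage: four hypothetical EEG readings become four 0.1 s tones.
freqs = scale_to_frequency([4.1, 4.5, 3.9, 5.0])
audio = render_tones(freqs)
```

Keeping the oscillator phase continuous between tones avoids audible clicks at each step. Writing the samples to a WAV file or an audio device is omitted to keep the sketch self-contained.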

2. Elements

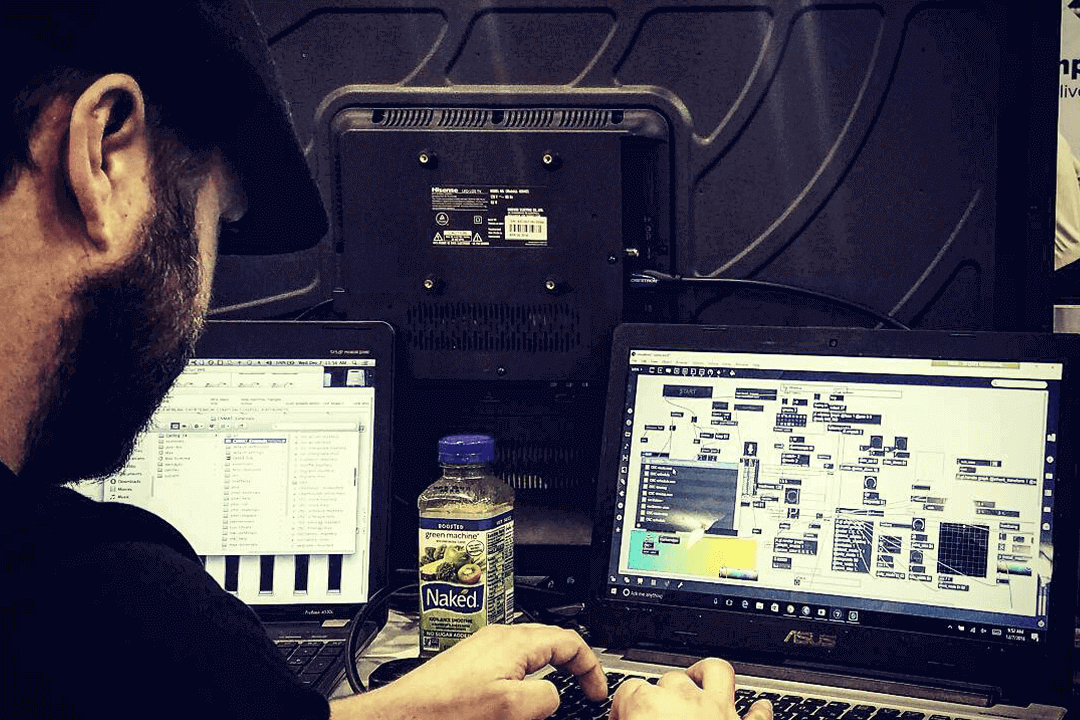

I have used a wide variety of hardware and software to achieve my goals and, when necessary, designed and built my own. The key components are as follows.

2.1 EEG

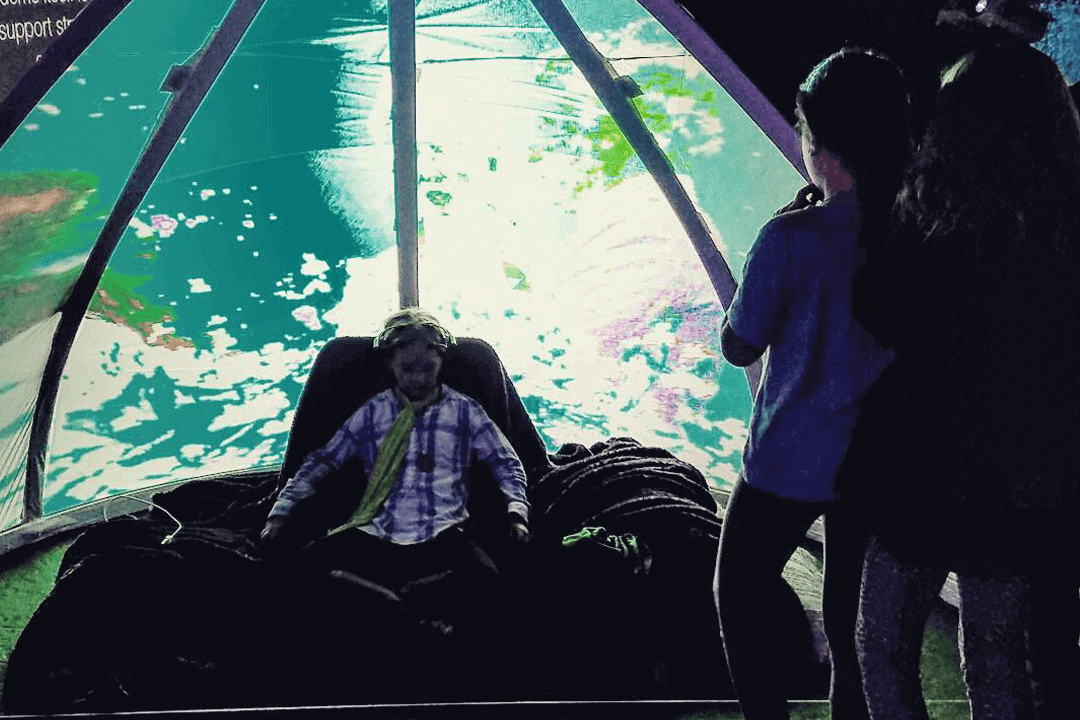

The EEG headset that I use is the Emotiv EEG (Figure 0). It is a wireless “high resolution, multi-channel, portable system which has been designed for practical research applications” (2). It is fully featured and consumer-priced, and it comes with host software whose suites interpret cognitive states, affective states, and facial expressions.

The headset is highly accurate, with 14 sensors and 2 reference sensors, and its algorithms and technical specifications rival those of EEGs that cost thousands. The accuracy can be visualized with additional software that shows the location of brain activity and the intensity of the four main brain wave bands: beta (>13 Hz), associated with excitation and alertness; alpha (8-13 Hz), associated with relaxation; and theta (4-8 Hz) and delta (0.5-4 Hz), associated with deep sleep. All of these result from neural synaptic firing and are characterized by their frequency and by the location of the activity in the brain (10). The Emotiv EEG measures all of this well, and I can export the data (Figure 0.1) to repurpose for my experiments.
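The band definitions above can be turned into a rough band-power estimate. The sketch below computes a naive discrete Fourier transform over one window of a single channel and sums the power that falls into each band. The 128 Hz sample rate used in the example and the 30 Hz upper edge for beta are assumptions not taken from the text, and this is not the Emotiv software's own processing; a practical pipeline would use an FFT with windowing.

```python
import cmath
import math

# Band edges in Hz from the text; capping beta at 30 Hz is an assumption.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}

def band_powers(signal, sample_rate):
    """Sum naive-DFT power per band over one window of samples."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n  # centre frequency of DFT bin k
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power = abs(coeff) ** 2 / n
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:  # half-open bands, so edges are not double-counted
                powers[name] += power
    return powers

def dominant_band(signal, sample_rate):
    """Name of the band with the most power in this window."""
    powers = band_powers(signal, sample_rate)
    return max(powers, key=powers.get)
```

For example, with a 128 Hz sample rate, a one-second 10 Hz sine wave is classified as `"alpha"` by `dominant_band`.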

“The Expressiv suite uses the signals measured by the neuroheadset to interpret [the user’s] facial expressions in real-time…” (Figure 1.1). The suite can monitor and interpret many facial expressions, such as raising or furrowing the brow, blinking, looking left or right, smiling, smirking left or right, and clenching the teeth. I use these data streams to trigger parts of my software program immediately, whenever something must happen at an exact moment. The other suites can also be used to control triggers consciously, but they do not respond as quickly as a facial expression.
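One way to turn an expression stream into a sharp trigger is rising-edge detection with a short refractory period, so that a held smile fires once rather than continuously. The sketch assumes each expression arrives as an intensity between 0 and 1 together with a timestamp in seconds (for example, after being forwarded into Max/MSP). The `ExpressionTrigger` class, its 0.5 threshold, and its 0.25 s refractory period are hypothetical choices, not part of the Emotiv software.

```python
class ExpressionTrigger:
    """Fire once when an expression's intensity crosses a threshold upward.

    Hypothetical helper: the threshold and refractory period are assumptions.
    """

    def __init__(self, threshold=0.5, refractory_s=0.25):
        self.threshold = threshold
        self.refractory_s = refractory_s
        self._was_active = False
        self._last_fire = None  # timestamp of the most recent trigger

    def update(self, intensity, timestamp):
        """Return True if this sample should fire the trigger."""
        active = intensity >= self.threshold
        fire = False
        if active and not self._was_active:
            # Rising edge: fire unless still inside the refractory window.
            if self._last_fire is None or timestamp - self._last_fire >= self.refractory_s:
                fire = True
                self._last_fire = timestamp
        self._was_active = active
        return fire

# Usage: (intensity, timestamp) pairs for a hypothetical "smile" stream.
trigger = ExpressionTrigger()
for intensity, t in [(0.0, 0.0), (0.8, 0.1), (0.9, 0.2)]:
    if trigger.update(intensity, t):
        print("smile trigger at", t)
```

A brief dip and recovery inside the refractory window is ignored, which helps with a flickering detection, while a deliberate second expression after the window fires again.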

“The Affectiv suite monitors the user’s emotional states in real-time. It enables an extra dimension in interaction by allowing the computer to respond to a user’s emotions…” It reports the user’s levels of engagement, boredom, excitement, frustration, and meditation in real time. It can also track these states over longer, user-definable time frames, displayed as a line graph of intensity over time.
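A user-definable time frame can be approximated with a time-based rolling mean. The sketch below assumes each affective score arrives as a number (for instance between 0 and 1) with a timestamp in seconds. The `RollingAffect` class and its window length are hypothetical, and the Affectiv suite's own long-term graph may be computed differently.

```python
from collections import deque

class RollingAffect:
    """Mean of an affective score over the last `window_s` seconds (hypothetical)."""

    def __init__(self, window_s):
        self.window_s = window_s
        self._samples = deque()  # (timestamp, value) pairs, oldest first
        self._total = 0.0

    def add(self, timestamp, value):
        """Add a sample and return the mean over the current window."""
        self._samples.append((timestamp, value))
        self._total += value
        # Drop samples older than the window, measured from the newest sample.
        while timestamp - self._samples[0][0] > self.window_s:
            _, old = self._samples.popleft()
            self._total -= old
        return self._total / len(self._samples)

# Usage: a 10-second window over hypothetical "excitement" scores.
excitement = RollingAffect(window_s=10.0)
for t, score in [(0.0, 0.2), (5.0, 0.4), (12.0, 0.6)]:
    print(t, round(excitement.add(t, score), 3))
```

Keeping a running total makes each update cheap, since every sample is added once and removed once; the newest sample is never dropped, so the window is never empty.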

Emotion detection may seem subjective, but it can be fairly accurate: up to 92.3% with some algorithmic models. Reference (7) reports this using the bipolar model, in which the arousal and valence dimensions are considered. “The arousal dimension ranges from not aroused to excited, and the valence dimension ranges from negative to positive. The dimensional model is preferable in emotion recognition experiments due to the following advantage:

dimensional model can locate discrete emotions in its space, even when no particular label can be used to define a certain feeling…Support Vector Machine (SVM) approach was employed to classify the data into different emotion modes. The result was 82.37% accuracy to distinguish the feeling of joy, sadness, anger and pleasure. A performance rate of 92.3% was obtained using Binary Linear Fisher’s Discriminant Analysis and emotion states among positive/arousal, positive/calm, negative/calm and negative/arousal were differed.”
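The bipolar model can be illustrated by placing a reading on the valence/arousal plane and either naming its quadrant, matching the four states quoted above, or finding the nearest discrete emotion. The coordinates in `EMOTION_POINTS` are invented for illustration and do not come from (7), and the nearest-point lookup is a deliberately simple stand-in, not the SVM or Fisher's Discriminant Analysis used in that study.

```python
import math

# Hypothetical positions on a valence/arousal plane scaled to [-1, 1].
EMOTION_POINTS = {
    "joy": (0.8, 0.6),
    "anger": (-0.7, 0.7),
    "sadness": (-0.7, -0.5),
    "pleasure": (0.7, -0.4),
}

def quadrant(valence, arousal):
    """Quadrant label in the style of the quote, e.g. 'positive/arousal'."""
    v = "positive" if valence >= 0 else "negative"
    a = "arousal" if arousal >= 0 else "calm"
    return f"{v}/{a}"

def nearest_emotion(valence, arousal):
    """Discrete label whose illustrative point is closest (Euclidean distance)."""
    return min(
        EMOTION_POINTS,
        key=lambda name: math.dist((valence, arousal), EMOTION_POINTS[name]),
    )

# Usage: a hypothetical reading that is mildly positive and strongly aroused.
print(quadrant(0.3, 0.9), nearest_emotion(0.3, 0.9))
```

The quadrant view needs no labelled data, while the nearest-point view shows how the dimensional model "can locate discrete emotions in its space"; a trained classifier would replace the hand-placed points.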

As high-quality EEGs became affordable, a small handful of researchers and artists began trying to make or control music with emotion. Most of these efforts are simple sonifications, but a few have attempted to compose instrumental music algorithmically. For example, the program in (8) “takes as input raw EEG data, and attempts to output a piano composition and performance which expresses the estimated emotional content of the EEG data.” Others have used emotions as read by the EEG to control synthesizers and trigger loops (9).

To read the complete paper, please contact me with a request. I will get back to you within 24 hours.
